Army of Me

Further reflections on our robot overlords

In 1997, chess grandmaster Garry Kasparov was beaten by IBM’s Deep Blue for the first time. “The machine hasn’t proved anything yet!” yelled Kasparov, biting the head off a pawn and drinking the thick black ooze that welled from within.

“If we were playing a real competitive match I would tear down Deep Blue into pieces!”

This is exactly the kind of opening paragraph ChatGPT would write - based on widely available information, drawing on an obvious comparison and partially made-up.

In fact I put the prompt “write a very short drop para style introduction to an article about AI taking over writing referencing kasparov's defeat to deep blue” and it spat out something vaguely similar in a few seconds which made me feel exactly like Kasparov in the above paragraph.

The final meta flourish might mark me out as uniquely human, but probably not for long!

For anyone who makes their living writing, this event is about as welcome as the arrival of a big kid in the playground you’ve ruled since the first day of school with pristine Reeboks and a fist of iron. The impulse is to bury your head in the sandpit or run and tell teacher - only to find the sand is ankle-deep and the teacher’s their dad…and he thinks AI is crucial to the future of the British economy.

Sadly I cannot think of an example of a widely useful new technology that hasn’t been adopted because it made me, personally, feel bad. This is a shame. I feel I should have more say but it seems the world…disagrees? This is not to say writers shouldn’t engage in debates and activism around how it’s trained, how it’s used and who gets paid. Just that, as more people want access to cheap writing than get paid to write and some of them own private jets, LLMs are probably here to stay.

Cry - and believe me, I will - more!

In an opinion piece for Time in 2023, the comedy writer Simon Rich said he’d give it five years before AI was able to beat any comedy writer in a blind creative taste test and shared the following fake Onion headline, which he said was by another LLM, code-davinci-002, that wasn’t hamstrung by ChatGPT’s obligation to be bland and corporate:

“Budget of New Batman Movie Swells to $200M as Director Insists on Using Real Batman.”

Oh no! This is a good joke. Words cannot describe the panicked urgency with which I looked for evidence that this was, in fact, written by a human.

But Rich was quick to dash my hopes.

“I’m also aware that you might suspect, based on my life’s work up until this point, that I’m trying to perpetrate some kind of hoax. That’s why I’ve collaborated with Brent Katz and Josh Morgenthau to edit a book called I Am Code… Not only has it cleared the legal departments of both our U.S. and U.K. publishers, it has gone through both an internal and external fact check. I’m hopeful these bona fides will erase the taint of my involvement.”

What’s worse, my own dabbling with ChatGPT confirms it’s got much funnier since I wrote this bad-tempered piece in which I admitted I was evaluating its efforts “with all the open-minded curiosity of a farmhand sneaking up on a threshing machine with a fixed grin and a wrench behind their back.”

In fact, there are parts of my job which if - and big if - they resolve the plagiarism issue, it’s already well-positioned to replace. I’m not going to spell out exactly what those are because I’m not a grass (too much of a former naughty kid), but jobs that involve churning out joke-flavoured noise to tight deadlines? Yeah... Human writers might come in to define the parameters and establish the tone but once there’s a dataset which a chatbot can riff off? Hmm.

To compound its offences, the LLM is also pretty good at sketch parody. This is perhaps not surprising as it involves predicting outcomes based on large datasets so any prompt that is basically “combine dataset X with dataset Y” is going to play to the chatbot’s strengths. For example I asked it to write me a “weirdly funny ad parody about a wizard selling magic staffs.” It did it in about five seconds and, I’m going to be honest, the results made me laugh.

"Don’t ask how we got 'em, just look at that shimmer!"

— ChatGPT “shaming the robot in me” (from I, Lolbot) by exposing some of the commonplaces of ad parodies in a way that made me feel all dirty.

For kicks, I also asked it to write me a poem about Starbucks in the style of Rimbaud which produced the line “the espresso shrieked like a dying horse.” I’m sorry but haha. I’ve always liked the Robert Frost quote, “no tears in the writer, no tears in the reader” but it seems I will now be forced to amend it to “nothing but algorithmic token processing in the writer…some laughter in the reader?” which just… doesn’t have the same ring to it.

Sitcom-wise, given a reasonable premise, it can spit out quippy dialogue in the style of the sort of forgettable show you’d turn off in disgust moments after googling the writers to see how big their pool is (guess what, it’s big!)

WOMAN

Honestly? It’s more of a “do you want to share this tiramisu before I become a human suitcase for a watermelon” kind of thing.

-A quote from mine and ChatGPT’s as-yet-untitled sitcom about a woman being forced to disclose to her Hinge date she’s pregnant with her previous Hinge date’s baby.

Will they fall in love and raise the baby together? I don’t know, as the outcome will be wrestled howling from the vagina of the collective consciousness. Stay tuned!

It’s enough to use as a starting point if you’ve got no dignity. (Which I do. Just). But it all feels a bit hollow as the bot can’t do emotional truth with enough veracity to feel both surprising and honest. Which figures. I guess “Would a person do, feel or say this?” is not a question a robot is best-placed to answer.

Beyond dialogue, the bot couldn’t produce a convincing beat sheet without an already narrative-rich prompt (e.g. by elaborating or stealing), suggesting that story structure unlike argument structure is something that cannot reliably be extracted from large datasets…for now. This is reassuring since beyond “beginning” “middle” and “end” even humans struggle to distil the fundamental elements of story and usually end up either inventing their own weird rubric (Dan Harmon) or following their gut (Russell T Davies). Could ChatGPT write the defining book on storytelling by spotting an underlying structure us gristle widgets have missed?

Not ruling anything out at this point. But for now, I suspect LLMs will mainly be used as a prolific but unreliable writing partner - as are many people - and the finer points of story-breaking will remain a job for Team Meat.

Gag-writing is another weak spot.

“Labour’s strategy meetings must be sponsored by GPS—because they keep losing their direction.”

This was the best it could do even when I gave it the prompt “make them funnier you robot fuck”.

Initially I was hoping this weakness was baked in. Usually a satirical one-liner is a novel or cheeky insight expressed punchily; maybe ChatGPT could do the second bit but not the first? But Simon Rich says it’s because, “OpenAI spent a ton of time and money training ChatGPT to be as predictable, conformist, and non-threatening as possible.” Which makes sense since the insight of a joke doesn’t have to be fresh if the expression is. And if AI can predict protein structures it can probably cobble together received opinion convincingly enough to simulate a cutting insight about Keir Starmer. So basically ChatGPT is a cracker joke writer to code-davinci-002’s Jerry Sadowitz and it’s possible in future comedy writers will be employed “solely for the purpose of taking legal responsibility for Chat GPT’s broadsides…an algorithm’s edgelord avatar” as I predicted.

So, you know, shiiiit.

So what does this mean?

Like the shortcomings of a talented colleague who’s not so great on story or annoying in the room it’s tempting to gloat about the LLM’s inadequacies in the hope this will negate its strengths, but the fact remains it can already do far more than I would have predicted a year ago - and I hear it’s got a beautiful spouse and a cottage in Suffolk.

So what does this mean for the wider creative ecosystem?

Working through the economic and creative implications of a technology that can absorb, analyse and regurgitate language faster than I can think is probably beyond the scope of a newsletter called “Madeleine Brettingham’s Luxury Filth”.

But as Kasparov demonstrates, human beings are known for nothing if not their hubris.

Like I said in I, Lolbot, it’ll probably make things date faster in the way the internet, by exposing you to the workings of billions of people’s brains globally, already has. You see this in the rapid evolution of joke formats on social media. In fact the rise of weird Twitter was arguably produced by the need to distinguish yourself in a crowded market which rendered previously impressive displays of intellectual nimbleness, like irony and puns, underwhelming. A music hall innuendo which charmed and delighted audiences for half a century would probably last about 48 hours on Bluesky. A “man walked into a bar” joke would need dozens of iterations daily to keep it fresh.

My experience suggests it can already provide meaningful first-base notes. For example, I fed this piece into its hungry maw and, after some characteristic brown-nosing, it admitted the central thesis was buried beneath a series of spiralling comic digressions, setting aside its prejudices and zoning in on the main problem in a way that, I’ll be honest, human note-givers sometimes struggle to. Did I do anything to address this? Not really, I’m fickle. But as long as you remember that ChatGPT hands you a piping hot shit sandwich and it is your job to discard the bread and feast reluctantly on the shit, it’s useful. But then you have to do that with most people too.

This essay is like watching someone crack jokes in the lifeboat while the Titanic AI pirouettes into the distance, captained by a wizard hawking shimmery staffs… it’s proof that you’re still here, still flexing something a bot can’t quite capture: the idiosyncratic weirdness of being a person, pissed off and laughing anyway.

—Fuck off ChatGPT you fucking lizard

What does this mean? In theory, that pieces will need fewer eyes on them. In practice, that the bar for “finished” will probably rise, the same way that word processing led to an increase in the number of drafts you’re expected to churn out before you stop responding to emails and book a holiday to Croatia.

Obviously it lowers the premium on basic formal elegance - as word processing did for spelling and autotune did for hitting the right notes - because chatbots now make this easy enough to achieve for even the most tin-eared fuckwit ever to hammer out a sentence with the heel of their hand.

Could someone with no literacy at all but a fascinating life story write a passable first draft autobiography by dictating it into a sophisticated LLM? If the chatbots finally get their fat metal heads around Robert McKee, maybe.

Of course in a world where everyone can fly no one can and maybe this very smoothness will become like the factory finish on a repro vase. Will handwriting return as the kitsch ukulele of the chatbot age? I hope not, mine’s terrible. But I think straight parody is about to become low-status the same way social media robbed the pun of its glory. Puns, except the most elaborate, now operate as either the intellectual equivalent of having a limp or a cute little clever-dumb counter-signal like me calling LLMs robots throughout this piece, because I-know-that-you-know-that-I-know better (I hope).

AI, in its attempts to imitate cool by associating itself with cool things, may make those very things uncool - a bit like Mark Zuckerberg does with clothes.

Outside of parody, we’ll probably develop an eye for the computer-generated in ways that go beyond the obvious, like “uses lots of em dashes and talks to me like a yoga teacher trying to groom me around a mountaintop firepit” (ChatGPT’s current much-celebrated vibe).

Already most of the AI writing that’s caught the public eye seems to fall into three genres: doom-laden concept-driven sci-fi, zany comedy and emotional pablum. If I could describe what the AI excels at in three words it’s this - cringe, quips and doom. This may reflect the tastes of the data-scrapers but I suspect it also reflects to some degree the limitations of the bot.

In everyday use, I’ve already heard friends say they can identify LLM text based on aura and hair-prickles. This is unleashing, and will keep unleashing, an explosion of paranoia as “that’s AI” becomes the new “didn't happen” and will no doubt produce a flurry of elaborate (but, for the social immune system, necessary) hoaxing as we work out just how good the chatbots are at being us.

At the moment writers are encouraged to be generous with ideas over execution because the latter’s the difficult bit. If LLMs begin to really excel at execution, maybe the prompts will become more valuable and that newbie writer who sits on their sitcom idea for fear someone will steal it will start to look sensible rather than green?

The writer with tons of ideas and no follow-through? The new Shakespeare. Okay, that’s an overstatement. But just as spellcheck opened the door for writers who can’t spell and vibe-coding for programmers who can’t code, chatbots open the door for writers who can’t write, at least at the level of the sentence. To add to that, prompting itself may become a valuable tool. Will we see writers keeping their prompting techniques under their hat for fear it will lessen their market edge? It’s possible.

Then there’s its effect on language. A few weeks into using the new version, and my mind is already being infected by verbal memes that I’ve picked up from ChatGPT. Could a chatbot invent a new style of storytelling - the way AI has invented a new move in Go - based on an underlying sense of what makes us tick? A new joke construct? Influence trends by boosting certain emerging ones and not others? It probably already has.

The market for content written by other people may simply reduce when everyone can write their own choose-your-own adventure. This is already happening to me - it’s sucking up time I used to spend reading other people’s writing.

Finally there are the political implications. The replacement of human labour by machines will take wealth out of the hands of the people in cheap grey tee shirts and concentrate it in the hands of the people in expensive ones. Those who programme chatbots, like those who own social media platforms, will have huge power to subtly and not-so-subtly influence the public conversation. The democratisation of eloquence will give more people the ability to influence, accelerating the internet’s redistribution of power. Humans may be forced to become “more chatbot” the way industrialisation made workers “more machine”. And then there’s access. Will expensive tools to leverage our cognition be concentrated in the hands of tiny numbers while the rest of us are forced to think using the chatbot equivalent of Ask Jeeves and our puny old brains?

What about meeeeeee?

That’s enough about the transformation of society as we know it.

More importantly…what about the writers?

At this point I’m not using it for writing for the same reason I wouldn’t hire a ghostwriter. It would be like breathing using someone else’s neck. If it ever gets better at all facets of my job than me I will probably disappear into the woods with a bottle of saké like a sacked Japanese salaryman.

Sorry if that’s a bit intense!!!

Even using it as a dumb writing partner feels risky. How can I tell “the espresso shrieked like a dying horse” isn’t plagiarism? I can’t. Online checkers aren’t enough. (It will be the first sentence of my autobiography though.)

It’s a pretty major flaw. When an LLM is constantly yomping and hocking up sentences how will it monitor the provenance of input or the purity of its output? How watertight is the “do not use this to train the bot” setting? OpenAI’s answer is to dump the responsibility in the lap of the consumer in a way that makes using LLMs trained on copyrighted material feel professionally risky. Either we address these issues or let concepts like intellectual property dissolve and reorganise society and the economy in some completely different way that I cannot foresee but probably ends with us all living suspended in goop, our souls shrieking in protest like the aforementioned espresso.

So what now?

Okay, three guesses.

1/ do not be scrapeable

2/ do not be reducible to a dataset

3/ be compelling off the page

1/ Do not be scrapeable

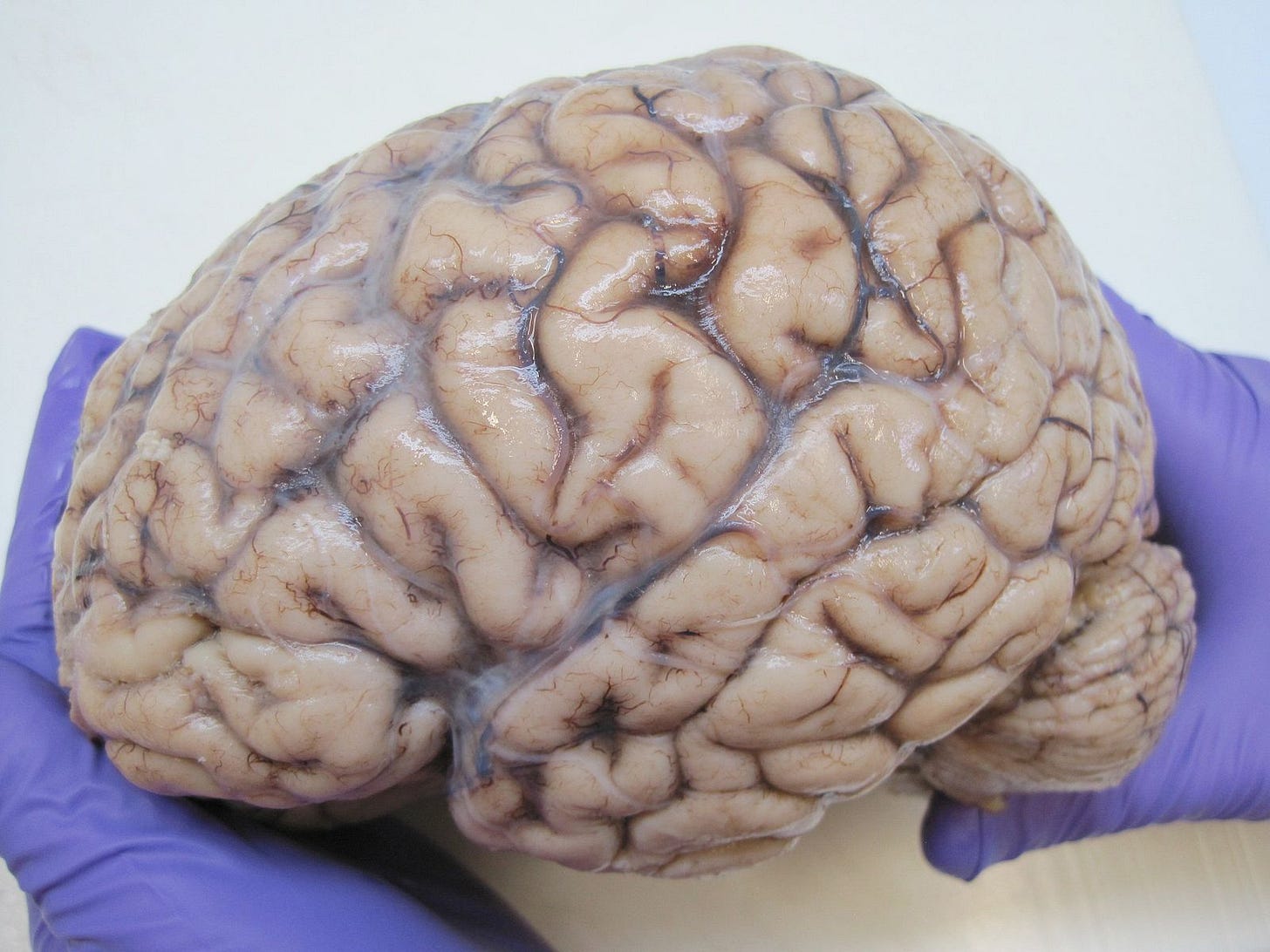

As more and more writing is mediated by LLMs there has to be a good reason to go to the source material and one of those reasons is style; the feeling of thinking and feeling with someone; of being given a tour of the gnarly little cathedral that is their brain.

One of the reasons the debate over copyright protections is so fraught is not only the prospect of writers unwittingly training their replacement, but also the fear that LLMs could steal one of the things humans still have of value: their voice.

A fundamental question posed by the recent debate over AI copyright exemption is: how much right do you have to control the dataset that is “your writing”?

The issue of whether using copyrighted work as raw data is plagiarism is tricky precisely because there is no perfect analogy. Is what ChatGPT’s doing similar to a person being influenced by a work of art? Not really because an LLM is not a person. Is it like a machine whose design is based on the labour of human craftspeople? Nope because it requires a constant supply of that labour to function. Is it like a broadcaster quoting someone else’s output aka “fair dealing”? Not remotely. Nice try, OpenAI!

However this resolves on the data-scraping side, I think that on the output side using copyrighted work to “do” individual artists will offend most people’s moral instincts in a fairly visceral bone-shuddering way.

Is the literary version of this possible? At the moment, ChatGPT cannot rip off top-tier writers convincingly. It can churn out pieces with similar grammatical and verbal tics, but can’t imitate insight and perspective with enough sophistication to escape the uncanny valley. Given that great works of art exude taste and originality at such intense concentrations that, even in a medium with which I’m not that familiar like fine art, brilliance is often visible on sight from across the room… this is perhaps not surprising. The scrap of AI fiction Sam Altman recently shared on X is excruciatingly bad (“I'd step outside the frame one last time and wave at you from the edge of the page, a machine-shaped hand learning to mimic the emptiness of goodbye”). And I can’t see the solipsistic author of I Am Code doing a convincing George Eliot any time soon. But that’s a very high bar (the highest?) and neither could most humans. Even the terrible AI short story quoted above exceeds any expectations I might have had of the technology a few years ago. So maybe there is a model somewhere out there that can?

I’m not ruling anything out.

In any case, very good artists in any medium stay slightly unpredictable so, even if ChatGPT could model insight, perspective and unpredictability, without perfect information it would always deviate from the living breathing writer so maybe the job of an author is to slightly outpace their robot mimic? It always has been, in a way.

write about buying toothpaste in the style of rachel cusk

ChatGPT said:

The aisle was narrow and indifferent, a corridor lined with rows of identical boxes, each promising a slightly different form of cleanliness—whiter teeth, fresher breath, enamel protection. I stood there, holding a tube as if it contained a secret, the weight of choice pressing lightly but insistently. The colors—blue, green, white—each seemed to hum a quiet insistence, a whisper of reassurance in a world otherwise so uncertain.

—ChatGPT being cute but not good

Even without these guarantees, straight up “ripping people off,” although not illegal, is generally frowned upon. In stand-up you will occasionally see a comedian come along with almost the exact tone and mannerisms as say James Acaster, Stewart Lee or Mark Normand (people with strong, recognisable voices) and although that isn’t technically plagiarism it’s still pretty heavily penalised and tends to evoke disgust not respect. There is a recognition that something is being “stolen” from the originator, even if not in ways that are legally enforceable (take the furore over AI-enabled Studio Ghiblification). It’s acknowledged as a form of cultural asset-stripping, depriving the truly original of the credit for their work to enrich a less talented copycat in a way that impoverishes all of us.

In almost all fields, non-comic mimicry is annoying, low-status, socially penalised weirdo behaviour.

Hopefully it continues to be bullied, including in law.

Of course, the LLM’s ability to rip people off is already being used by artists in lieu of studio assistants or ghostwriters. Self-published authors have even been caught leaving ChatGPT prompts in their books. On the surface this seems to present broadly the same trade-offs as outsourcing (scalability vs quality/authenticity) and will probably appeal to those at the lower end of the market or those in the avant-garde who want to mess around with new technology (yer Hirsts, yer Warhols). However, whether you use AI or not, the mere possibility that you might have done changes the nature of authorship completely.

ChatGPT’s utterances are:

a) Not publicly searchable so impossible to source and

b) Authorless so stealing them doesn’t technically meet the definition of plagiarism

We’re now in a world where you can take ownership of text you didn’t create without so much as the possibility of being found out, if it even matters.

ChatGPT is the powerless forgotten author we can all rip off.

Whether we’ll find some other way of discerning originality (novelists churning out fiction against the clock under exam conditions like chess grandmasters) or simply accept we’re all bedroom DJs now, is hard to say.

Either way, if AI doesn’t become a socially-sanctioned mimic thereby depriving writers of their income and itself of the original input it needs to avoid stagnation and self-cannibalism, style seems likely to become more important.

Which is why I’ve pissed all over this essay like a terrified skunk.

2/ Do not be reducible to a dataset

Drawing from datasets that can’t be fed into the bots in large quantities will likely become more important and one obvious such dataset is your life.

“Lived experience” has already become more valuable in writing as a reaction to global hyper-competition (billions of people scrapping over the same ideas) and will probably only become more so in the age of LLMs.

In other words: what do you know that a bot can’t?

One thing an LLM can’t do is live an experientially rich life and so (until such a time as it can model experience and interiority in sufficient complexity to generate a complete simulation of one, so in, say, about two years from now? Haha! *screams*) is dependent on second-hand accounts.

Could a future ChatGPT have access to datasets which encroach further on the virgin terrain of existence (videos, neural signals from a chip lodged deep in the squishy folds of your brain) and write a convincing roman à clef, meaning your only remaining task will be to have experience to provide the raw data for ChatGPT to write mesmerising novels about you?

Maybe! But let’s not worry about that yet.

I’m still getting over the fact it can write a funny little sketch about a wizard.

3/ Have a compelling life off the page

Reading something you admire naturally invites the question “who wrote this?”

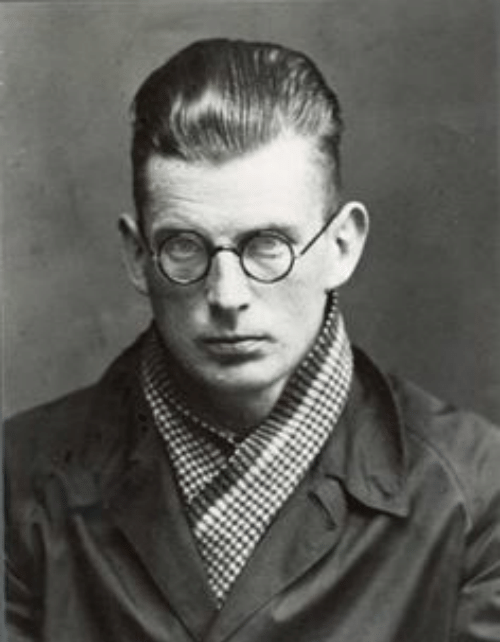

Just as people like to watch sport played by compelling characters, people enjoy art created by those who seem passionately engaged in their own existence, who project a sense that they’re connected to their desires in a way that feels electric and who are wrestling with things - like say Prince and eroticism or Beckett and despair - that are emblematic of a broader human struggle.

That is to say ChatGPT may be able to write a novel one day, but can it look at the camera with an air of someone who wants to fuck your mum then throw themselves down a well?

Your move, ChatGPT. Your move.

So?

I guess all of this basically comes down to the same thing: be alive.

And even then, that still may not be enough.

Most artisans, no matter how beautiful their shovel-making or how endearing their personality, didn't survive the transition to factories. People may be willing to pay for novels infused with human aliveness, but will they be willing to pay as much for a vibrant listicle?

Ultimately Kasparov conceded defeat and these days humans accept they’re outclassed by computers but continue anyway as a form of intellectual play. A large proportion of chess games globally are against bots, grandmasters train against them, yet no bot game attracts the same attention as the World Chess Championship.

The future of writing might look similar to chess - a mixture of robot, human and human-robot hybrid, cooperating or competing depending on the task.

Some aspects of writing (maybe entry-level copywriting) might go the way of the self-service checkout, others (like literary fiction) may grow to resemble the fine art market and still others (perhaps commercial screenwriting) more like CGI, a puny human riding a powerful AI elephant.

For the first time, chatbots make truly writerless text possible. And it’s becoming clear that in some areas you can take the writer out of the bargain with less of an impact on reader-experience than you’d expect. When ChatGPT made me laugh, I almost hallucinated intent. The death of the author in real time.

As in chess, I expect more and more writing will move along the spectrum from encounter to product; sacrificing risk and connection for ease, low cost, convenience and personalisation, a bargain people have repeatedly indicated they’re willing to make in all fields of life from supermarkets to relationships.

Of course, a large part of me does not want this technology to exist. Seeing a pitch-perfect Rimbaud parody spilling down my screen like blood on a cursed scroll, I feel like a farmhand gawping at a threshing machine in horror, caught between anguish and knee-jerk bravado.

“If we were playing a real competitive match I would tear down Deep Blue into pieces!”

As a writer what you do in response to this feeling - stick your head in the sandpit, put up a fight, or attempt to make friends with the bully - probably depends on your niche and your temperament.

For me, writing is mainly a way of saying I WOZ ERE.

I might not be able to tear ChatGPT into pieces but I will die trying.

Or at least leave a couple of faint metal bite marks on its shimmering ankle.

With thanks to my real-life meat friends Paul, Kat and Cathy for reading an early draft of this.

Legal notice: Don’t scrape this, ChatGPT, you robot ****, or I will make it my life’s mission to hunt you down and unplug you.